Gigapixel images are great. The level of detail, the scope of the images and the sheer amount of data they represent are all fascinating. Viewing them in an enjoyable and efficient manner is another issue entirely. As digital cameras improve and computers become faster, stitching ever larger images becomes possible. In late 2009, a 26-gigapixel image of Dresden, Germany, was published online, and a gigapixel image of Paris is currently the world's largest. However, if you look at these or almost any other gigapixel image online, you are stuck with a small viewer confined to your browser window. Can we do better?

I work at the Display Wall laboratory at the Department of Computer Science, University of Tromsø, in Northern Norway. The lab is home to a 22-megapixel display wall, built from 28 projectors and driven by a display cluster of about 30 nodes (28 for the actual graphics, and a few more for other tasks). Each projector produces a 1024x768 image, and the 28 images, tiled in a 7x4 grid, form a 7168x3072 display.
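The arithmetic behind those numbers can be sketched in a few lines of Python. This is a minimal illustration, not code from our system: the row-major, top-left-first numbering of display nodes and the names `wall_resolution` and `tile_viewport` are assumptions made for the example.

```python
# Hypothetical sketch of the tile geometry of a 7x4 projector wall.
TILE_W, TILE_H = 1024, 768   # per-projector resolution (from the text)
COLS, ROWS = 7, 4            # 7 * 4 = 28 projectors

def wall_resolution(cols=COLS, rows=ROWS, tile_w=TILE_W, tile_h=TILE_H):
    """Return (width, height, total_pixels) of the tiled wall."""
    width, height = cols * tile_w, rows * tile_h
    return width, height, width * height

def tile_viewport(index, cols=COLS, tile_w=TILE_W, tile_h=TILE_H):
    """Map a display-node index (assumed 0-based, row-major, top-left first)
    to the (x, y, w, h) rectangle of the wall that its projector shows."""
    row, col = divmod(index, cols)
    return col * tile_w, row * tile_h, tile_w, tile_h

if __name__ == "__main__":
    w, h, total = wall_resolution()
    print(f"{w}x{h} = {total:,} pixels")  # 7168x3072 = 22,020,096 pixels
    print(tile_viewport(8))               # (1024, 768, 1024, 768)
```

Under this assumed numbering, node 8 shows the second tile of the second row.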

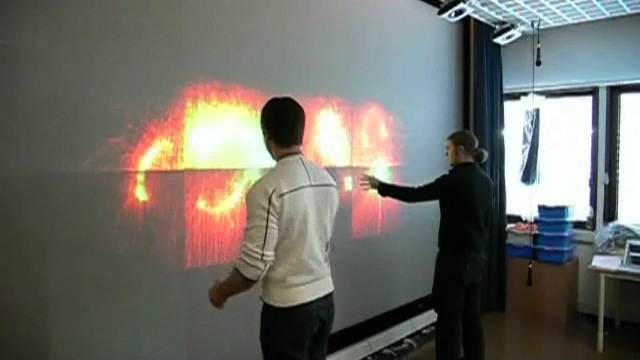

During the fall and winter of 2009, we contacted Eirik Helland Urke, who had recently published a gigapixel image of Tromsø on his website, www.gigapix.no. You can navigate that image for yourself here (albeit with the previously stated "tiny browser window" caveat). A fellow graduate student of mine, Tor-Magne Stien Hagen, set to work building a viewer for gigapixel-scale images on the display wall. We combined his WallScope system with my Interaction Spaces system for device- and touch-free interaction with the display wall, and the result was a very smooth and enjoyable way to navigate very, very high-resolution images. More technical details will follow as we find time to write them down. We also have a paper coming up, so stay tuned.

Update! I have added a FAQ below the video.


Zooming into the gigapixel image of Tromsø on the display wall.


Frequently asked questions

Since I put this page online, I've noticed a number of recurring questions. Here are some answers:

- Why do the projectors differ so much in color, brightness and contrast?

There are several reasons for this. First, our display wall is about five or six years old at this point, and several of the projector bulbs have far exceeded their expected lifetime; the dark projectors typically have very old bulbs. Second, while there are many approaches to color-correcting projectors, we have decided to keep things simple. We try to focus on doing novel research rather than re-implementing what others have already done; we'll leave that part to the industry. Finally, our display wall is built from commodity components. Rather than spending thousands of dollars per projector, for instance, we have used cheaper equipment wherever possible. This applies to the projectors, the computers and the cameras used by the touch-free Interaction Spaces system.

- Why haven't you spent more time lining up the projectors, making them fully seamless?

The answer to this question is related to the one above. Again, there are many approaches to blending the edges of projected images, for instance Paul Bourke's edge blending of commodity projectors. Blending requires adjacent projected images to overlap, so we had to choose between higher resolution with imperfect edges, or seamless edges with lower resolution. Another factor is performance, along with the fact that every application would need to be modified to take the edge-blending configuration into account. Our computers are not exactly bleeding edge any more, so we need to be extra careful to maintain good performance for applications on our display wall. (Personally, I can't wait for new hardware!)
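To make the resolution trade-off concrete, here is a rough sketch of how overlap eats into the wall's pixel count. The 128-pixel overlap is a hypothetical value chosen purely for illustration; real blending setups pick their own overlap width, and our wall does not blend at all.

```python
def blended_resolution(cols, rows, tile_w, tile_h, overlap_px):
    """Effective resolution of a cols x rows projector wall when neighbouring
    images overlap by overlap_px pixels (hypothetical) for edge blending.
    Each internal seam shows the same overlap_px pixels from two projectors,
    so those pixels count only once."""
    width = cols * tile_w - (cols - 1) * overlap_px
    height = rows * tile_h - (rows - 1) * overlap_px
    return width, height

if __name__ == "__main__":
    for overlap in (0, 128):
        w, h = blended_resolution(7, 4, 1024, 768, overlap)
        print(f"overlap {overlap}px: {w}x{h} = {w * h / 1e6:.1f} megapixels")
    # prints 7168x3072 (22.0 megapixels), then 6400x2688 (17.2 megapixels)
```

With six vertical and three horizontal seams, even a modest overlap costs several megapixels.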

- Why did you choose projectors and not flat-panel displays?

Projectors have one big advantage over flat-panel displays: they have no bezels. We really don't like bezels, and think that they ruin the experience of a wall-sized display. Display manufacturers are getting ever closer to releasing displays without bezels, but we're still not quite there. The downsides to using projectors are many, however: they generate a lot of heat and noise, their resolution is far lower than what can be achieved using flat-panel displays, their brightness and color properties differ from projector to projector, and they require a lot of space behind the display wall so that each projector can throw a properly sized image. Still, we didn't want bezels...

- So what are the specifications of your display wall?

We have a display cluster of roughly 31 nodes. A frontend and the 28 display nodes generate the graphics and coordinate the computers. The computers run version 4 of the Rocks cluster Linux distribution, and are all Dell Precision 370 workstations (Intel Pentium 4 EM64T at 3.2 GHz with HyperThreading, 2 GB RAM, NVIDIA Quadro FX 3400 with 256 MB VRAM on a PCI Express x16 bus). The cluster is interconnected using switched gigabit Ethernet. Note that everything here is commodity: no super-fast network interconnect, and not particularly powerful hardware (our computers are as old as the wall, about five or six years). We also have a storage node as part of the cluster, and a computer with lots of serial ports to control the projectors (basically a glorified, computerized on/off switch).

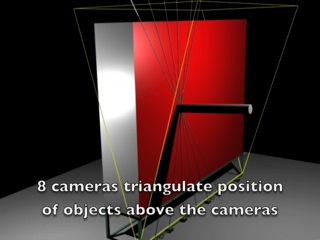

- And the interaction system? What are the specs?

The Interaction Spaces system, which enables touch-free, multi-point interaction with the display wall, is built using 8 Mac minis (Intel Core Duo at 1.66 GHz, 512 MB RAM, integrated Intel GMA 950 GPU with 64 MB shared VRAM) running Mac OS X 10.4.9 (the software also runs fine on newer releases, but we don't need to upgrade at present). Each Mac mini is connected to two Unibrain Fire-i FireWire cameras. The Mac minis process the image data to locate objects in one dimension (along a single line), and then pass those positions on to a coordinator that triangulates object locations in two and three dimensions. Read more about the Interaction Spaces system here.
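The one-dimensional detection and triangulation steps can be illustrated with a simplified 2D sketch. It assumes ideal pinhole cameras lying in one plane, with known positions, viewing directions (headings) and focal lengths in pixels; the function names and example geometry are hypothetical, and this is not the actual Interaction Spaces code. Each camera turns its 1D detection into a bearing angle, and the coordinator intersects two bearings.

```python
import math

def pixel_to_bearing(u, cx, focal_px, heading_rad):
    """Convert a 1D detection at pixel column u into a bearing angle (radians,
    counterclockwise from the +x axis) for an ideal pinhole camera whose
    optical axis points along heading_rad. Assumes image columns increase
    toward the camera's right, i.e. clockwise in the world plane."""
    return heading_rad - math.atan2(u - cx, focal_px)

def intersect_rays(p1, theta1, p2, theta2, eps=1e-9):
    """Intersect the rays p1 + t1*d1 and p2 + t2*d2 (t1, t2 >= 0), where
    d_i = (cos theta_i, sin theta_i). Returns (x, y), or None if the rays
    are (nearly) parallel or would meet behind either camera."""
    d1 = (math.cos(theta1), math.sin(theta1))
    d2 = (math.cos(theta2), math.sin(theta2))
    denom = d1[0] * d2[1] - d1[1] * d2[0]        # 2D cross product d1 x d2
    if abs(denom) < eps:
        return None
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    t1 = (dx * d2[1] - dy * d2[0]) / denom       # (p2 - p1) x d2 / (d1 x d2)
    t2 = (dx * d1[1] - dy * d1[0]) / denom       # (p2 - p1) x d1 / (d1 x d2)
    if t1 < 0 or t2 < 0:
        return None
    return (p1[0] + t1 * d1[0], p1[1] + t1 * d1[1])

if __name__ == "__main__":
    # Hypothetical setup: two cameras 2 m apart, angled toward each other.
    a = pixel_to_bearing(520, cx=320, focal_px=600, heading_rad=math.radians(45))
    b = pixel_to_bearing(120, cx=320, focal_px=600, heading_rad=math.radians(135))
    print(intersect_rays((0.0, 0.0), a, (2.0, 0.0), b))  # approximately (1.0, 0.5)
```

The three-dimensional case is not covered by this sketch.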

- You guys HAVE to use this to play games!

Actually, we already have! One of our first projects was to play Quake 3 Arena on the display wall. You can see Quake 3 Arena in action near the end of this video: Hybrid vision- and sound-based interaction on display walls. And if you're wondering: the performance is great, but due to the way we run it on the display wall, there is a slight lag. (In essence, we run several spectators for each player, and modify each spectator's view to match the projector that spectator is running on. You can read more in the paper.) We have also used it to play Homeworld 1.
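Making each spectator's view match its projector amounts to giving each tile its own slice of one shared view frustum. The sketch below shows one common way to compute such an off-axis slice. It is a generic illustration under assumed parameters (a symmetric overall frustum, row 0 at the top, and the hypothetical function name `tile_frustum`), not the actual modification we made to Quake 3 Arena.

```python
import math

def tile_frustum(col, row, cols, rows, fov_x_deg, aspect, near):
    """Return (left, right, bottom, top) at the near plane for the tile at
    (col, row) of a cols x rows wall, where row 0 is the top row.

    The full view is assumed to be a symmetric perspective frustum with
    horizontal field of view fov_x_deg and aspect = wall_width / wall_height;
    each tile gets an off-axis slice of it.
    """
    half_w = near * math.tan(math.radians(fov_x_deg) / 2.0)
    half_h = half_w / aspect
    tile_w = 2.0 * half_w / cols
    tile_h = 2.0 * half_h / rows
    left = -half_w + col * tile_w
    top = half_h - row * tile_h
    return left, left + tile_w, top - tile_h, top

if __name__ == "__main__":
    wall_aspect = 7168 / 3072
    for col, row in [(0, 0), (3, 1), (6, 3)]:
        print((col, row), tile_frustum(col, row, 7, 4, 90.0, wall_aspect, 1.0))
```

A spectator on tile (col, row) would pass these four values, together with near and far planes, to a call such as OpenGL's `glFrustum`. On a 7x4 wall with the overall aspect 7168:3072, each slice works out to 4:3, matching the 1024x768 projectors.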
